Method

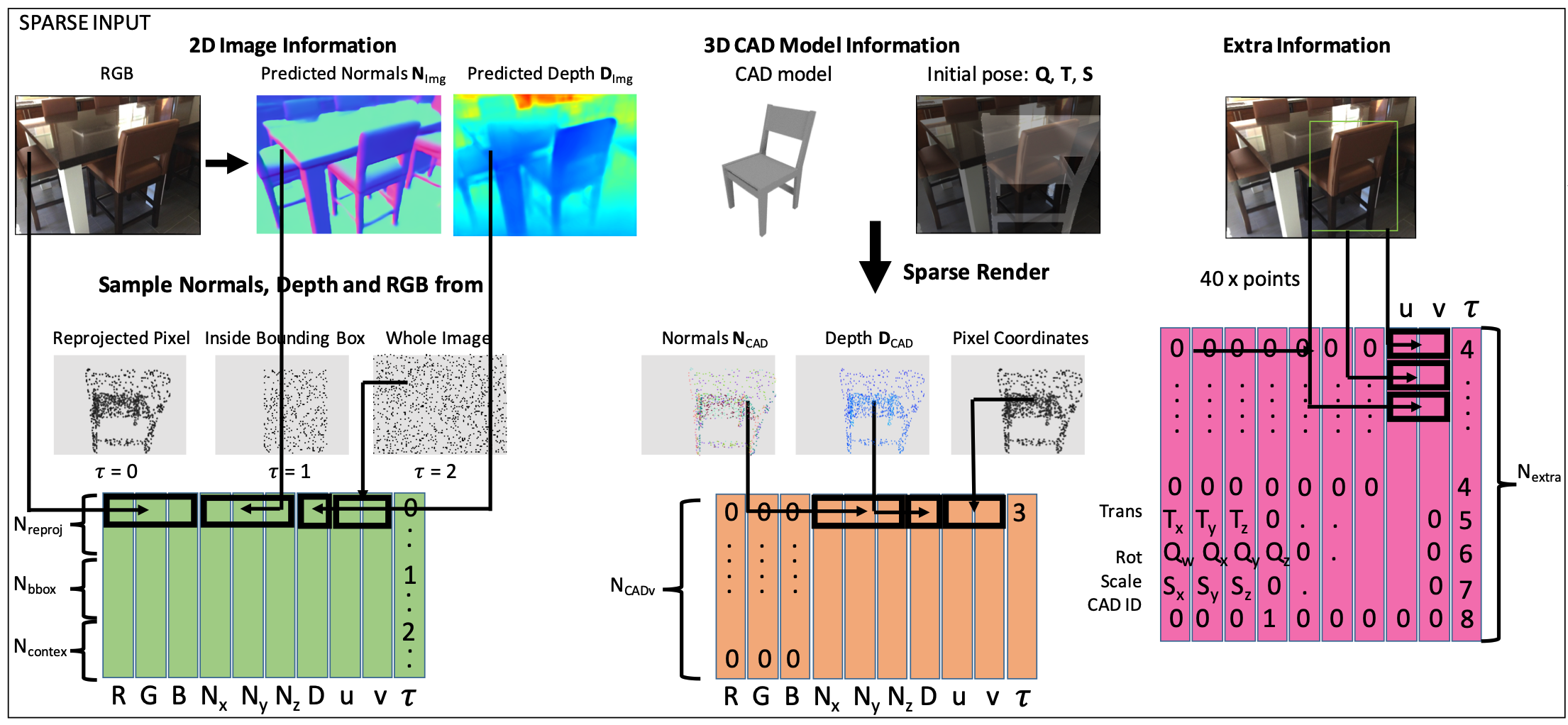

The input to the pose prediction network comes from three different sources: the 2D image, the 3D CAD model and extra information.

2D Image Information: We sample a set of pixels in the image and, for each one, use its RGB values together with the estimated depth and surface normal as input to the network. These values are stacked channel-wise, and we additionally append the pixel coordinates (u, v), which tell the network where in the image the sample lies, as well as a token τ indicating that the information comes from the image.
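The per-pixel input construction can be sketched as follows. This is a minimal illustration, assuming uniform random pixel sampling, that the estimated depth and normal maps are already available as arrays, and a scalar source token; the name `build_image_inputs` and the value `IMAGE_TOKEN` are hypothetical, not taken from the original implementation.

```python
import numpy as np

IMAGE_TOKEN = 0.0  # hypothetical scalar value for the source token tau (image)

def build_image_inputs(rgb, depth, normals, num_samples, token=IMAGE_TOKEN, rng=None):
    """Sample pixels and stack their features channel-wise.

    rgb: (H, W, 3), depth: (H, W), normals: (H, W, 3).
    Returns (num_samples, 10) rows laid out as [r, g, b, depth, nx, ny, nz, u, v, tau].
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = depth.shape
    # Uniform sampling without replacement (the sampling scheme is an assumption).
    flat = rng.choice(h * w, size=num_samples, replace=False)
    v, u = np.unravel_index(flat, (h, w))  # row index = v, column index = u
    feats = np.concatenate(
        [
            rgb[v, u],                          # (N, 3) colour
            depth[v, u][:, None],               # (N, 1) estimated depth
            normals[v, u],                      # (N, 3) estimated surface normal
            np.stack([u, v], axis=1),           # (N, 2) pixel coordinates
            np.full((num_samples, 1), token),   # (N, 1) source token
        ],
        axis=1,
    )
    return feats.astype(np.float32)
```

In practice the token could equally be a learned embedding rather than a fixed scalar; the scalar keeps the sketch short.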

3D CAD Model Information: We sample a set of points (between 100 and 1000) and their surface normals from the CAD model and use the current CAD model pose to project them into the image plane. As with the 2D image, we stack all available information together with the pixel coordinates, and add a different token τ to inform the network that this information comes from the CAD model.
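Projecting the sampled CAD points with the current pose can be sketched with a standard pinhole camera model. This sketch assumes the points have already been sampled from the mesh surface, that the pose is given as a rotation matrix `R`, translation `t` and per-axis scale `s`, and that the camera intrinsics `K` are known; the function names and `CAD_TOKEN` are hypothetical.

```python
import numpy as np

CAD_TOKEN = 1.0  # hypothetical scalar value for the source token tau (CAD model)

def project_points(points, R, t, s, K):
    """Project model-space points into the image with a pinhole camera.

    points: (N, 3); R: (3, 3); t: (3,); s: (3,) per-axis scale; K: (3, 3) intrinsics.
    Returns pixel coordinates (N, 2) and camera-space depth (N,).
    """
    cam = (points * s) @ R.T + t          # scale, rotate, translate into camera frame
    proj = cam @ K.T                      # homogeneous image coordinates
    uv = proj[:, :2] / proj[:, 2:3]       # perspective division
    return uv, cam[:, 2]

def build_cad_inputs(points, normals, R, t, s, K, token=CAD_TOKEN):
    """Stack [depth, nx, ny, nz, u, v, tau] for each projected CAD point."""
    uv, depth = project_points(points, R, t, s, K)
    # Normals transform with the inverse-transpose of R @ diag(s), i.e. R @ diag(1/s).
    n = normals / s
    n = n / np.linalg.norm(n, axis=1, keepdims=True)
    normals_cam = n @ R.T
    return np.concatenate(
        [depth[:, None], normals_cam, uv, np.full((len(points), 1), token)], axis=1
    ).astype(np.float32)
```

Unlike the image samples, the CAD samples carry no RGB values, so only the geometric channels are stacked here.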

Extra Information: Additionally, we explicitly provide the predicted bounding box, the current CAD model pose and the CAD model's ID in the database as input to the network.
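One simple way to pass this extra information is to flatten it into a single conditioning vector. The sketch below assumes a box in `[x_min, y_min, x_max, y_max]` format, a quaternion rotation, and a one-hot encoding of the database ID; all of these are illustrative choices, and `build_extra_inputs` is a hypothetical name.

```python
import numpy as np

def build_extra_inputs(bbox, quat, t, s, cad_id, num_cad_models):
    """Concatenate bounding box, current pose and CAD model ID into one vector.

    bbox: (4,) [x_min, y_min, x_max, y_max]; quat: (4,) rotation; t: (3,) translation;
    s: (3,) scale; cad_id: integer index of the CAD model in the database.
    """
    # One-hot ID encoding (an assumption); a learned embedding scales better to large databases.
    one_hot = np.zeros(num_cad_models, dtype=np.float32)
    one_hot[cad_id] = 1.0
    return np.concatenate([bbox, quat, t, s, one_hot]).astype(np.float32)
```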

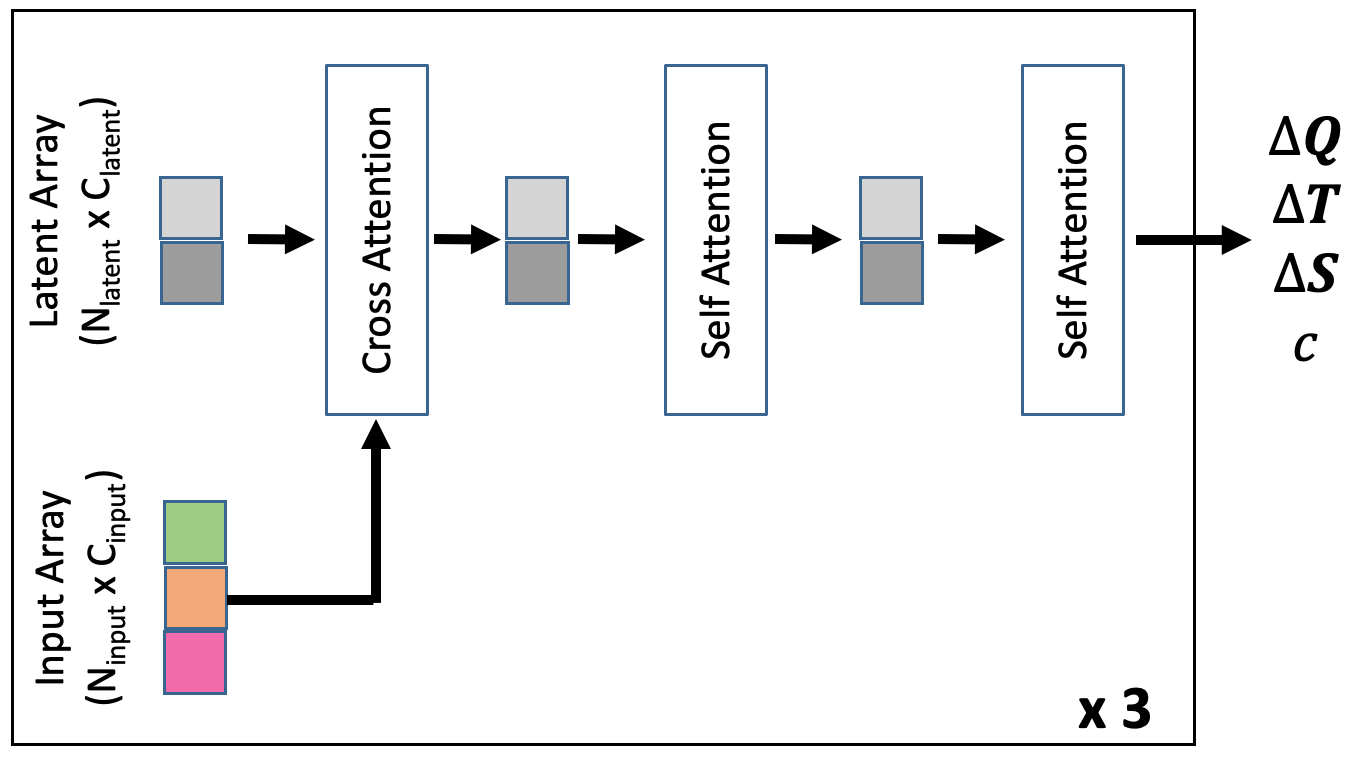

This combined information is the input to the pose update prediction network, which predicts refinements ΔQ, ΔT and ΔS that update the rotation, translation and scale of the CAD model. In the figure above, c is a classification score indicating how likely the current rotation is to be within 45° of the correct rotation; it is used to choose the rotation from which to initialise the pose. We use the Perceiver architecture, whose cross-attention from a small latent array to the inputs allows it to efficiently process large inputs.
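The reason cross-attention handles many input samples efficiently can be seen in a single-head sketch: queries come from a small, fixed-size latent array, while keys and values come from the (potentially large) set of stacked image, CAD and extra inputs. The weight matrices below are hypothetical placeholders for learned parameters, and this omits the latent self-attention layers and output heads of a full Perceiver.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attend(latents, inputs, w_q, w_k, w_v):
    """Single-head cross-attention from M latents to N input tokens.

    latents: (M, D), inputs: (N, C), w_q: (D, d), w_k: (C, d), w_v: (C, d).
    The attention matrix has shape (M, N), so with M fixed and small the cost
    grows linearly in the number of input tokens N.
    """
    q = latents @ w_q                           # (M, d) queries from the latents
    k = inputs @ w_k                            # (N, d) keys from the inputs
    v = inputs @ w_v                            # (N, d) values from the inputs
    scores = q @ k.T / np.sqrt(q.shape[-1])     # (M, N) scaled dot products
    return softmax(scores, axis=-1) @ v         # (M, d) updated latents
```

Self-attention directly over the inputs would instead build an (N, N) matrix, which grows quadratically with the number of sampled pixels and CAD points.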

We update the CAD model's pose with the predicted refinements and sample new inputs for the next iteration. This process is repeated three times.
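The iterative refinement loop can be sketched as below. How the refinements are applied is an assumption here: ΔQ is treated as a unit quaternion composed on the left of the current rotation, ΔT is added to the translation, and ΔS multiplies the scale. `sample_inputs` and `predict_updates` are hypothetical callables standing in for the input construction and the network.

```python
import numpy as np

def quat_mul(a, b):
    """Hamilton product of two quaternions in (w, x, y, z) order."""
    w1, x1, y1, z1 = a
    w2, x2, y2, z2 = b
    return np.array([
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    ])

def apply_update(pose, dq, dt, ds):
    """Apply (dq, dt, ds) to a pose given as (quaternion, translation, scale)."""
    q, t, s = pose
    q_new = quat_mul(dq, q)
    q_new /= np.linalg.norm(q_new)   # keep the rotation a unit quaternion
    return q_new, t + dt, s * ds

def refine(pose, sample_inputs, predict_updates, num_iters=3):
    """Alternate between re-sampling inputs for the current pose and updating it."""
    for _ in range(num_iters):
        inputs = sample_inputs(pose)           # image, CAD and extra inputs for this pose
        dq, dt, ds = predict_updates(inputs)   # network prediction (hypothetical callable)
        pose = apply_update(pose, dq, dt, ds)
    return pose
```

For example, a constant update of a 30° rotation about the z-axis applied over three iterations yields a 90° rotation about z.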